Programming the ODROID-GO: Audio (Part 6)

The final step to having a full interface to all of the ODROID-GO’s hardware is to write an audio layer. Once that’s finished we’ll be able to start doing more generic game programming and less Odroid-specific programming. Any interaction with the peripherals will be through the Odroid functions.

Getting the sound working took the longest of everything done so far due to my lack of experience with audio programming and a lack of good documentation on the part of the IDF.

In the end, there actually wasn’t much code required to get sound to play. The majority of my time was spent figuring out how to lay out sound data in the way that the ESP32 wanted and how to configure the ESP32’s audio driver to align with the hardware configuration.

Digital Audio Basics

There are two parts to digital audio: recording and playing.

Recording

To record audio in a computer, we first need to convert it from a continuous (analog) space to a discrete (digital) space. This is done with an Analog-to-Digital Converter (ADC), which we talked about when dealing with the D-pad in Part 2.

The ADC takes a sample of the input wave and digitizes its value, which can then be stored in a file somewhere.

Playing

A digital sound file can be turned from a digital space back into an analog space with a Digital-to-Analog Converter (DAC). The DAC has a certain range of values it can reproduce. For example, an 8-bit DAC with a 3.3V voltage source could output analog voltages in the range of 0 to 3.3V in 12.9mV steps (3.3V divided by 256).

The DAC takes the digital values and converts them back into a voltage which can be sent to an amplifier or speaker or some other device expecting analog sound waves.
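To make the step-size arithmetic concrete, here is a minimal C sketch of an ideal 8-bit DAC. The function names are hypothetical, and real DACs add offset and nonlinearity errors that this model ignores.

```c
#include <stdint.h>

/* Size of one DAC step in volts: the reference voltage divided by the
 * number of codes (2^bits). Assumes an ideal, perfectly linear DAC. */
double dac_step_volts(double vref, unsigned bits)
{
    return vref / (double)(1u << bits);
}

/* Ideal output voltage of an 8-bit DAC for a code in 0..255. */
double dac8_output_volts(double vref, uint8_t code)
{
    return code * dac_step_volts(vref, 8);
}
```

With a 3.3V reference, code 255 comes out at about 3.287V rather than the full 3.3V, because the 256 codes run from 0 to 255.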

Sample Rate

When the analog audio is recorded with the ADC, samples are taken at a certain rate, with each sample being a snapshot of the sound wave at a point in time. This is called the Sample Rate with units of Hertz.

The greater the rate at which we sample, the more faithfully we can capture the frequencies in the original signal. The Nyquist-Shannon sampling theorem says (roughly) that you must sample at more than twice the highest frequency that you wish to capture.

The human ear can hear frequencies from approximately 20 Hz to 20 kHz, so a common sampling rate for reproducing high-quality music is 44.1 kHz, which is slightly over twice the highest frequency the human ear can pick up. That ensures the full range of instrumental and vocal frequencies is captured.

Each sample will take up space in a file though, so we can’t just sample as high as possible. But you lose important information if you don’t sample fast enough. The sample rate that you choose must depend on what frequencies are present in the audio you’re capturing.

When playing, you must match the sample rate of the source or the pitch and length of your sound will be off.

Consider ten seconds of audio recorded with a sampling rate of 16 kHz. If you play it at 8 kHz, the perceived pitch will be lower and the length will be twenty seconds. If you play it at 32 kHz, the perceived pitch will be higher and the length will be five seconds.
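The relationships in that example fit in a few lines of C. This is a sketch with hypothetical function names: the number of samples is fixed, so playing them faster or slower scales both the duration and every frequency in the sound, and the Nyquist limit is simply half the sample rate.

```c
/* Highest frequency a given sample rate can represent: half the rate. */
double nyquist_limit_hz(double sample_rate_hz)
{
    return sample_rate_hz / 2.0;
}

/* Duration of a clip when its samples are played at a different rate.
 * The sample count stays the same, so duration scales by recorded/played. */
double played_duration_s(double recorded_duration_s,
                         double recorded_rate_hz,
                         double playback_rate_hz)
{
    double sample_count = recorded_duration_s * recorded_rate_hz;
    return sample_count / playback_rate_hz;
}

/* Factor by which every frequency (and so the perceived pitch) is scaled. */
double pitch_factor(double recorded_rate_hz, double playback_rate_hz)
{
    return playback_rate_hz / recorded_rate_hz;
}
```

For the ten-second, 16 kHz clip, `played_duration_s(10, 16000, 8000)` gives 20 seconds with a pitch factor of 0.5, and `played_duration_s(10, 16000, 32000)` gives 5 seconds with a pitch factor of 2.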

This video demonstrates the difference in sample rates with examples.

Bit Depth

Sample rates are only half of the equation. There is also Bit Depth, which is the number of bits per sample.

When the ADC captures the sample of the audio wave, it must turn that analog value into a digital one, and the range of values that can be captured depends on how many bits are used: 8-bit (256 values), 16-bit (65,536 values), 32-bit (4,294,967,296 values), etc.

The number of bits per sample limits the Dynamic Range of the audio: i.e., the difference between the loudest and quietest parts that can be represented. The most common bit depth for music is 16 bits.
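A quick way to see the link between bit depth and dynamic range: each extra bit doubles the number of levels, and a doubling in amplitude is 20·log10(2), or about 6.02 dB. The C sketch below (hypothetical names) uses that common rule of thumb for ideal linear PCM rather than computing the logarithm.

```c
#include <stdint.h>

/* Number of distinct values a sample of the given bit depth can hold.
 * Valid for bit depths below 64. */
uint64_t sample_levels(unsigned bits)
{
    return (uint64_t)1 << bits;
}

/* Approximate dynamic range of ideal linear PCM in decibels,
 * using the rule of thumb of about 6.02 dB per bit. */
double approx_dynamic_range_db(unsigned bits)
{
    return 6.02 * bits;
}
```

By this estimate, 8-bit audio has roughly 48 dB of dynamic range and 16-bit audio roughly 96 dB.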

When playing, you must match the bit depth of the source or the sample values and the length of your sound will be off.

Consider an audio file with four samples stored as 8-bit: [0x25, 0xAB, 0x34, 0x80]. If you tried to play them as if they were 16-bit, you would only have two samples: [0x25AB, 0x3480] (or [0xAB25, 0x8034] if the bytes are read in little-endian order, which is what WAV files and the ESP32 use). Not only would the sample values be wrong, but you would also have halved the sample count and thus halved the audio length.
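The reinterpretation in that example can be reproduced in C. This sketch (hypothetical function names) pairs up the bytes both ways: big-endian, matching how the values are written in the paragraph above, and little-endian, which is what a WAV file or the ESP32 would actually use.

```c
#include <stddef.h>
#include <stdint.h>

/* Reinterpret 8-bit samples as 16-bit samples, two bytes at a time,
 * with the first byte of each pair as the LOW byte (little-endian). */
size_t bytes_as_u16_le(const uint8_t *bytes, size_t byte_count, uint16_t *out)
{
    size_t n = byte_count / 2;               /* half as many samples */
    for (size_t i = 0; i < n; i++) {
        out[i] = (uint16_t)(bytes[2 * i] | (bytes[2 * i + 1] << 8));
    }
    return n;
}

/* Same reinterpretation with the first byte of each pair as the HIGH byte
 * (big-endian). */
size_t bytes_as_u16_be(const uint8_t *bytes, size_t byte_count, uint16_t *out)
{
    size_t n = byte_count / 2;
    for (size_t i = 0; i < n; i++) {
        out[i] = (uint16_t)((bytes[2 * i] << 8) | bytes[2 * i + 1]);
    }
    return n;
}
```

Either way, four 8-bit samples become two 16-bit ones with unrelated values.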

It is also important to know the format of your samples: 8-bit unsigned, 8-bit signed, 16-bit unsigned, 16-bit signed, etc. It is common for 8-bit samples to be unsigned and 16-bit samples to be signed (this is the convention WAV files use). If you get the format wrong, your audio will come out badly distorted.
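To illustrate the signed/unsigned difference, here is a C sketch (hypothetical names) that converts between the two WAV conventions: unsigned 8-bit with silence at 128 (0x80), and signed 16-bit with silence at 0.

```c
#include <stdint.h>

/* Unsigned 8-bit (silence = 128) to signed 16-bit (silence = 0):
 * remove the 128 offset, then scale up by 256. */
int16_t u8_to_s16(uint8_t sample)
{
    return (int16_t)((sample - 128) * 256);
}

/* Signed 16-bit back to unsigned 8-bit: shift the range to 0..65535,
 * then keep the high byte. Round-trips exactly with u8_to_s16. */
uint8_t s16_to_u8(int16_t sample)
{
    return (uint8_t)(((int32_t)sample + 32768) >> 8);
}
```

Treating an unsigned 8-bit sample as signed (or vice versa) effectively shifts every value by half the range, which is why a format mix-up sounds so bad.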

This video demonstrates the difference in bit depths with examples.

WAV Files

The common way to store raw audio in a computer is the WAV Format which has a simple header describing the format of the audio (sample rate, bit depth, size, etc), followed by the actual audio data.

The audio is not compressed at all (unlike something like MP3) which makes it easy for us to play without needing a codec library.
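To show how simple the header is, here is a C sketch that pulls the format fields out of a WAV header. It assumes the canonical 44-byte PCM layout, where the "fmt " chunk is immediately followed by the "data" chunk; many real files insert other chunks (such as "LIST") and would need a proper chunk walker. `WavInfo` and `wav_parse_canonical` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Fields of interest from a canonical 44-byte PCM WAV header. */
typedef struct {
    uint16_t audio_format;     /* 1 = uncompressed PCM */
    uint16_t channels;
    uint32_t sample_rate;
    uint16_t bits_per_sample;
    uint32_t data_size;        /* bytes of audio following the header */
} WavInfo;

/* WAV multi-byte fields are little-endian. */
static uint16_t read_u16_le(const uint8_t *p)
{
    return (uint16_t)(p[0] | (p[1] << 8));
}

static uint32_t read_u32_le(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Returns 0 on success (audio data starts at buf + 44),
 * or -1 if buf does not look like the canonical layout. */
int wav_parse_canonical(const uint8_t *buf, size_t len, WavInfo *info)
{
    if (len < 44) return -1;
    if (memcmp(buf, "RIFF", 4) != 0 || memcmp(buf + 8, "WAVE", 4) != 0) return -1;
    if (memcmp(buf + 12, "fmt ", 4) != 0 || memcmp(buf + 36, "data", 4) != 0) return -1;

    info->audio_format    = read_u16_le(buf + 20);
    info->channels        = read_u16_le(buf + 22);
    info->sample_rate     = read_u32_le(buf + 24);
    info->bits_per_sample = read_u16_le(buf + 34);
    info->data_size       = read_u32_le(buf + 40);
    return 0;
}
```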

The major issue with WAV files is that, because they are not compressed, they can become quite large. The file size is proportional to the duration, the sample rate, the bit depth, and the number of channels.

Size (bits) = Duration (secs) x Sample Rate (samples/sec) x Bit Depth (bits/sample) x Channel Count

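The sample rate is usually the factor with the most room to shrink, so the easiest way to save on space is to choose one that is relatively low: dropping from 44.1 kHz to around 5.5 kHz cuts the size by a factor of about eight, while going from 16-bit to 8-bit only halves it. We’re going for an old-school sound, so using a low sample rate will work just fine.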
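The size formula translates directly into C. This sketch (hypothetical name) returns the size of the raw audio data in bytes, not counting the header, and includes the channel count (1 for mono, 2 for stereo).

```c
#include <stdint.h>

/* Bytes of uncompressed PCM audio:
 * duration x sample rate x bit depth x channel count, divided by 8 bits/byte. */
uint64_t pcm_size_bytes(uint32_t duration_s, uint32_t sample_rate_hz,
                        uint32_t bits_per_sample, uint32_t channels)
{
    uint64_t bits = (uint64_t)duration_s * sample_rate_hz * bits_per_sample * channels;
    return bits / 8;
}
```

One second of CD-quality stereo (44.1 kHz, 16-bit, 2 channels) comes to 176,400 bytes, while one second of 5512 Hz, 8-bit mono is 5,512 bytes, roughly 32 times smaller.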

I2S

The ESP32 has a peripheral that makes interfacing with audio hardware relatively painless: Inter-IC Sound (I2S).

The I2S protocol is relatively simple with only three signals: a clock, a channel select (left or right), and the actual data line.

The clock rate is based on the sample rate, the bit depth, and the number of channels. The clock ticks once for each bit of data, so the clock rate must be set appropriately for the audio to play correctly.

Clock = Sample Rate (samples/sec) x Bit Depth (bits/sample) x Channel Count
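As a worked example of the clock formula, here is a one-function C sketch (hypothetical name). It only shows the arithmetic; how the ESP32’s I2S driver derives its internal clocks from the configured values is up to the driver.

```c
#include <stdint.h>

/* I2S bit clock: one tick per bit, for every channel of every sample. */
uint32_t i2s_bit_clock_hz(uint32_t sample_rate_hz, uint32_t bits_per_sample,
                          uint32_t channels)
{
    return sample_rate_hz * bits_per_sample * channels;
}
```

For 44.1 kHz, 16-bit stereo audio this gives 1,411,200 Hz; for 5512 Hz with 16-bit samples on two channels it gives 176,384 Hz.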

The ESP32’s I2S driver has two possible modes: it can either output the data to pins which are connected to an external I2S receiver that can decode the protocol and pass the data on to an amp, or it can send the data to the ESP32’s internal DAC which will then output an analog signal that can be sent to an amplifier.

The ODROID-GO doesn’t have any sort of I2S decoder on-board, so we must use the ESP32’s internal DAC which is only 8 bits, meaning that we must use 8-bit audio. There are two DACs, one connected to IO25 and one connected to IO26.

The procedure looks like this:

- We send audio data to the I2S driver

- The I2S driver sends the audio data to the 8-bit internal DAC

- The internal DAC outputs an analog signal

- The analog signal is sent to an audio amplifier

- The audio amplifier sends its output to the speaker

- The speaker makes noise

The Circuit

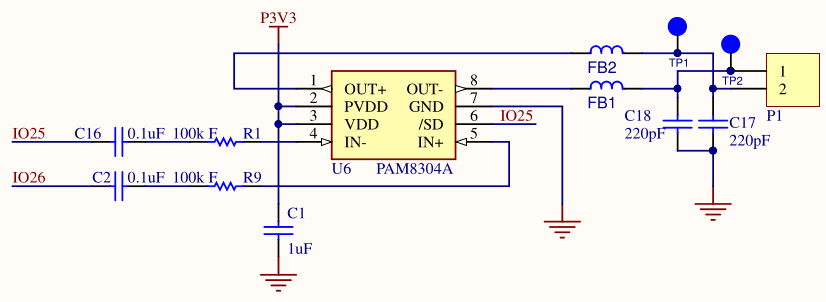

If we look at the audio circuit on the ODROID-GO Schematic, we see two GPIO pins (IO25 and IO26) connected to the inputs of an audio amplifier (PAM8304A). IO25 is also connected to the /SD signal of the amplifier, which is a pin that will enable or disable the amplifier (low signal meaning disable). The outputs of the amplifier are connected to a single speaker (P1).

Remember that IO25 and IO26 are the outputs of the ESP32’s 8-bit DACs, so one DAC is connected to IN- and the other DAC is connected to IN+.

IN- and IN+ are the audio amplifier’s differential inputs. Differential inputs are a way of reducing noise due to Electromagnetic Interference (EMI). Noise picked up along the way tends to appear equally on both signals, so when the amplifier subtracts one signal from the other, that shared noise cancels out.

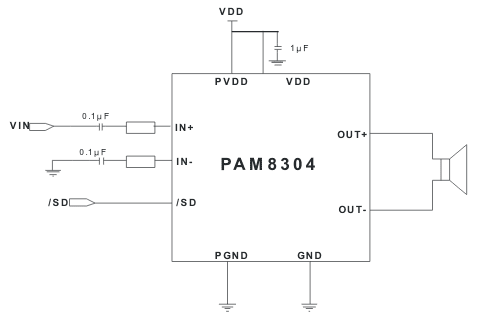

If we look at the audio amplifier datasheet, there is a Typical Applications Circuit which is the suggested way to use the amplifier from its manufacturer.

They suggest connecting IN- to ground, IN+ to an input signal, and /SD to a toggle signal. If there were 0.005V of noise present, then IN- would read 0V + 0.005V and IN+ would read VIN + 0.005V. The amplifier subtracts one input from the other, (VIN + 0.005V) - (0V + 0.005V), to recover the true signal value (VIN) without the noise.
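Using the datasheet scenario above (IN- at ground, 5 mV of noise on both inputs), a tiny C model of an ideal differential input shows the cancellation. The function name and the 1200 mV signal value are arbitrary choices for illustration, and real amplifiers only reject shared noise up to their common-mode rejection ratio, which this idealization ignores.

```c
/* Ideal differential input, in millivolts: the amplifier responds to
 * (IN+) - (IN-), so noise present equally on both inputs cancels. */
int differential_mv(int in_plus_mv, int in_minus_mv)
{
    return in_plus_mv - in_minus_mv;
}
```

With VIN = 1200 mV, `differential_mv(1200 + 5, 0 + 5)` returns 1200, the noise-free signal.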

However, the designers of the ODROID-GO did not follow this recommended configuration.

Looking again at the ODROID-GO schematic, we see that the designers hooked a DAC output up to IN- and that same DAC output is connected to /SD. /SD is an active-low shutdown signal, so we must set it high if we want the amplifier to be operational.

That means to use the amp we must not use IO25 as a DAC but instead as an always-high GPIO output. But that also then sets IN- high which is not what the amplifier datasheet recommends (it should be ground). We must then use the DAC that is connected to IO26 as our I2S output to be fed into IN+. This does not achieve the expected noise-elimination because IN- is not connected to ground. There is a constant soft fuzz of noise coming out of the speakers at all times.

We have to make sure we configure the I2S driver correctly because we only want to use the DAC that is connected to IO26. If we used the DAC connected to IO25, it would repeatedly trigger the amplifier’s shutdown signal and cause our audio to sound awful.

In addition to that weirdness, when using the 8-bit internal DAC, the I2S driver on the ESP32 requires that it is provided with 16-bit samples, but it only passes the upper byte of each sample on to the 8-bit DAC. So we must take our 8-bit audio and copy it into a buffer twice as large, putting each 8-bit sample into the upper byte of a 16-bit sample and leaving the lower byte zero. Then we give that buffer to the I2S driver and it sends the most significant byte of each sample to the DAC. Unfortunately that means we must pay for 16 bits of memory per sample but only get to use 8 of them.
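The conversion described above is one shift per sample. Here is a minimal C sketch with a hypothetical function name; the demo code for this series may structure its loop differently. Because 8-bit WAV samples are already unsigned with silence at 0x80, which lines up with the DAC’s 0 to 255 input range, no sign conversion is applied.

```c
#include <stddef.h>
#include <stdint.h>

/* Copy 8-bit samples into a 16-bit buffer, placing each sample in the
 * upper byte and leaving the lower byte zero. `out` must have room for
 * `count` uint16_t values (twice the bytes of `in`). */
void expand_u8_to_u16_msb(const uint8_t *in, uint16_t *out, size_t count)
{
    for (size_t i = 0; i < count; i++) {
        out[i] = (uint16_t)(in[i] << 8);
    }
}
```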

Multitasking

Unfortunately we cannot keep the game running on a single core like I had initially wanted because of what seems to be a bug in the ESP32’s I2S driver.

The I2S driver is supposed to use DMA (like the SPI driver does), meaning that we should be able to just initiate an I2S transfer and then continue on with our work while the I2S driver carries out the data transfer of the sound data.

But instead the CPU is blocked for the duration of the sound which is not feasible for a game. Imagine pressing the jump button and then your player sprite pauses in motion for 100ms while the jump sound plays.

To solve this problem, we can take advantage of the fact that the ESP32 has two cores on board. We can create a task (i.e., a thread) on the second core that will be in charge of playing audio. That way we can pass an audio buffer pointer from the main game task to the audio task, and the audio task will initiate the I2S transfer and block while the sound plays. But our main task on the first core (with input-handling and rendering) will continue on without being blocked.

Initialization

With that knowledge in hand, we can properly initialize the I2S driver. It only takes a few lines of code, but the challenge is figuring out which parameters to set to get the audio to play correctly.


We first configure IO25 (which is connected to the amp’s shutdown signal) as an output so that we can control the audio amplifier, and we set it high to enable the amp.

Next we configure and install the actual I2S driver. I’ll break down each configuration bit line by line because each requires some explaining.

mode

- We set the driver to be master (controlling the bus), a transmitter (we’re sending data out to receivers), and to use the built-in 8-bit DAC (because we don’t have an external DAC on the ODROID-GO board).

sample_rate

- We use a sample rate of 5512 Hz because it’s the lowest sample rate provided by the tool that we’ll use to generate sound effects. It’s low enough that it saves on space and execution time, but it still covers a good enough range of frequencies for a simple game. Following the Nyquist-Shannon theorem, with that sample rate we can reproduce frequencies up to just under 2756 Hz (half of 5512).

bits_per_sample

- As mentioned earlier, the internal DAC of the ESP32 is 8-bit, but the I2S driver requires that we send it 16 bits for every sample and it then sends the upper 8 to the DAC.

communication_format

- The documentation doesn’t explain this parameter well at all, but I assume it has something to do with our 8-bit data being stuffed into the MSB of the 16-bit buffer.

channel_format

- The GPIO pin connected to the IN+ signal of the audio amplifier is IO26 which maps to the “left” channel of the I2S driver when using the internal DACs. We only want I2S to write to that channel because the right channel is mapped to IO25 which is connected to the amp shutdown signal and we don’t want that to be toggled on and off.

dma_buf_count and dma_buf_len

- These are the number of DMA buffers and the length (in samples) of each buffer, but I found no good information on how to set them, so I used the values present in the IDF documentation’s example. When I changed them to other values I noticed odd effects in the audio.

Next we create a queue which is the FreeRTOS way of sending data between tasks. You put data into the queue from one task and take it out of the queue in a separate task. We create a struct called QueueData that bundles up the audio buffer pointer and the buffer length into a single structure that can be placed into the queue.

Finally we create the task that runs on the second core. We hook it up to the PlayTask function, which does the actual playing. The task itself is an infinite loop that repeatedly checks if there is any data in the queue. If there is, it sends it off to the I2S driver so that it can be played. It will block on the i2s_write call, and that’s fine because it occurs on a separate core from our main game thread.

The call to i2s_zero_dma_buffer is required so that there is no residual audio playing from the speakers once a play is complete. I’m not sure if this is a bug in the I2S driver or expected behavior, but without it the speaker emits garbage after the audio buffer has finished playing.

Playing Audio


The actual function call to play an audio buffer is extremely simple now that all of the setup is finished, because the main work is done in the other task. We stuff the buffer pointer and buffer length into a QueueData struct and put it in the queue to be consumed by PlayTask.

A consequence of all of this is that one audio buffer must finish playing before a second can begin. So if a jump and a shoot occur at the same time, whichever sound happened first would play before the second, rather than at the same time.

What we will likely do in the future is mix the various sounds from a frame into the audio buffer that is sent to the I2S driver. That would allow multiple sounds to effectively be played at the same time.
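One common way to do that kind of mixing, sketched here as an assumption rather than the approach this series will necessarily use: treat each unsigned 8-bit sample as an offset from silence (128), add the offsets, and clamp the result to the 0 to 255 range the DAC can reproduce. `mix_u8` is a hypothetical name. Hard clipping like this distorts when loud sounds overlap; scaling each sound down before summing is a common alternative.

```c
#include <stddef.h>
#include <stdint.h>

/* Mix two unsigned 8-bit sounds into `out`, which must hold as many
 * samples as the longer input. Past the end of the shorter sound,
 * it contributes silence. */
void mix_u8(const uint8_t *a, size_t a_len,
            const uint8_t *b, size_t b_len,
            uint8_t *out)
{
    size_t len = a_len > b_len ? a_len : b_len;
    for (size_t i = 0; i < len; i++) {
        int sa = i < a_len ? (int)a[i] - 128 : 0;   /* offset from silence */
        int sb = i < b_len ? (int)b[i] - 128 : 0;
        int mixed = sa + sb + 128;
        if (mixed < 0)   mixed = 0;                 /* clamp to DAC range */
        if (mixed > 255) mixed = 255;
        out[i] = (uint8_t)mixed;
    }
}
```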

Demo

We’ll generate our own sound effects with jsfxr which is a tool designed specifically to generate the sort of game sounds we’re looking for. We can directly control the sample rate and the bit depth, and then output a WAV file.

I created a simple jump sound effect that sounds a bit like Mario’s jump. It has a sample rate of 5512 (like we configured during initialization) and a bit depth of 8 (required due to the 8-bit DAC).

Rather than parse a WAV file directly in our code, we’ll do something similar to the sprite loading in Part 4’s demo and remove the WAV header from the file using a hex editor. That way the file read from the SD card is just the raw bytes. We’ll also hard-code the sound length rather than read it dynamically. This is not how we will load sound assets in the future but it works well enough for the demo.

You can get the raw file here.


We load the 8-bit data into the 8-bit soundEffect buffer, and then copy that data into the 16-bit soundBuffer buffer with the data in the upper eight bits. Again, this is required due to the idiosyncrasies of the IDF.

With a 16-bit buffer now created, we can play the audio with the press of a button. The volume button seems like a good choice.


We track the state of the button to ensure that we don’t accidentally call Odroid_PlayAudio more than once with a single button press.
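That press tracking amounts to detecting the transition from released to pressed. A minimal C sketch with hypothetical names; in the demo, a true result is where Odroid_PlayAudio would be called, so holding the button down across many frames does not retrigger the sound.

```c
#include <stdbool.h>

/* Remembers the button state from the previous frame. */
typedef struct {
    bool was_pressed;
} ButtonEdge;

/* Returns true only on the frame where the button goes from
 * released to pressed. */
bool button_pressed_edge(ButtonEdge *b, bool is_pressed)
{
    bool triggered = is_pressed && !b->was_pressed;
    b->was_pressed = is_pressed;
    return triggered;
}
```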

Source Code

You can find all of the source code here.

Further Reading

- ODROID-GO Schematic

- Audio Amplifier Datasheet

- ESP-IDF Documentation: DAC

- ESP-IDF Documentation: I2S

- WAVE File Format

- Video: Bit Depth Explanation

- Video: Sample Rate Explanation

Last Edited: Dec 20, 2022